
ESP32


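Preface:

Because of a project requirement, I recently started a series on building an RTSP network camera with the ESP32-CAM. This is the first article in the series, and it records the development process.

Problem addressed in this article: capture image data with the ESP32-CAM and send the resulting video stream over RTSP to a host PC for display.

Implementation: the ESP32-CAM pushes the video stream and a Python program on the host pulls it over RTSP. The ESP32-CAM is programmed with the Arduino IDE, using Ai-Thinker's board support package.

Support package installation URL:

Streaming result: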

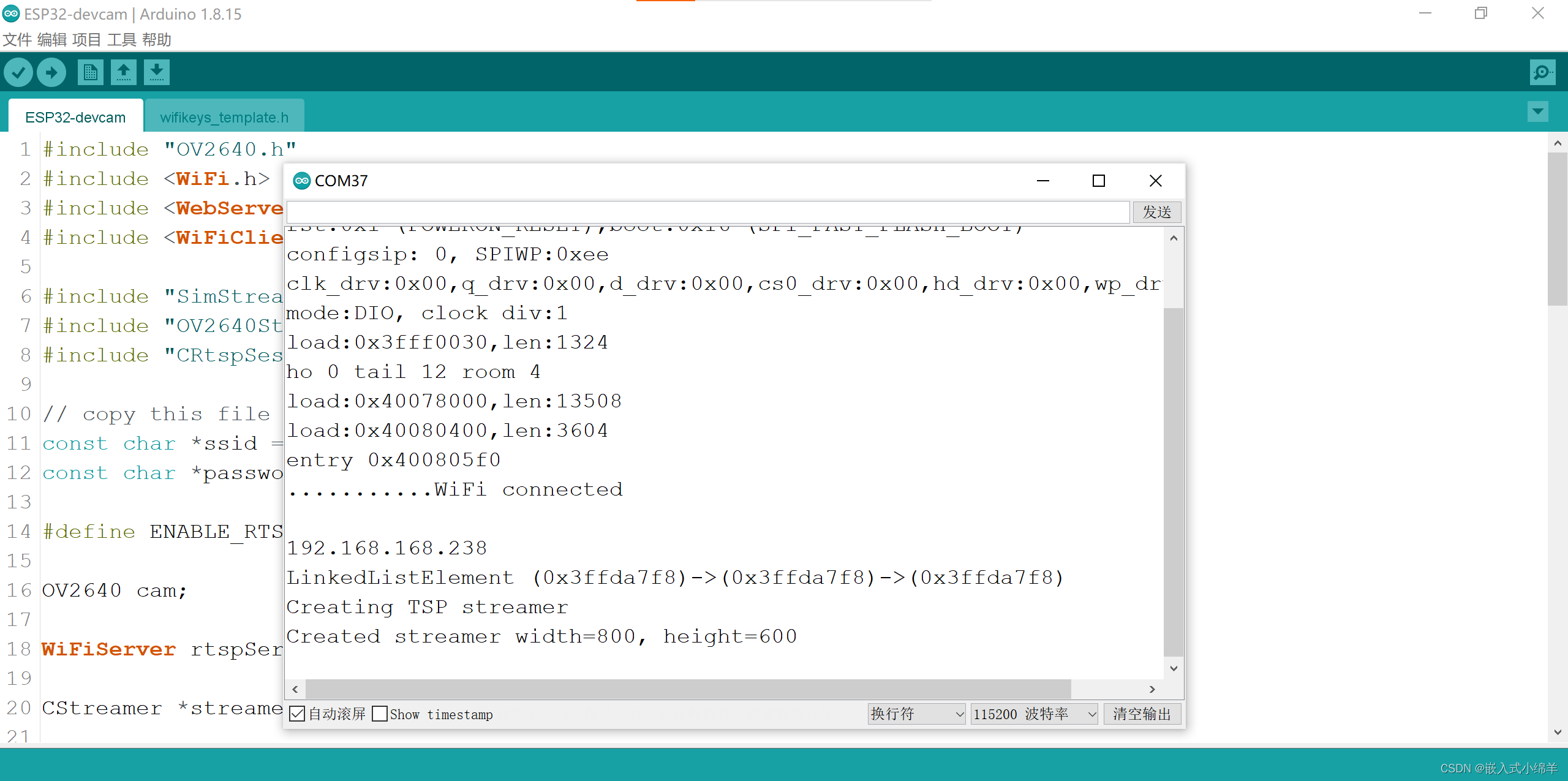

Official example code:

```cpp
#include "OV2640.h"
#include <WiFi.h>
#include <WebServer.h>
#include <WiFiClient.h>

#include "SimStreamer.h"
#include "OV2640Streamer.h"
#include "CRtspSession.h"

#define ENABLE_RTSPSERVER

OV2640 cam;

#ifdef ENABLE_WEBSERVER
WebServer server(80);
#endif

#ifdef ENABLE_RTSPSERVER
WiFiServer rtspServer(8554);
#endif

#ifdef SOFTAP_MODE
IPAddress apIP = IPAddress(192, 168, 1, 1);
#else
#include "wifikeys_template.h"
#endif

#ifdef ENABLE_WEBSERVER
// Serve an endless multipart MJPEG stream over HTTP.
void handle_jpg_stream(void)
{
    WiFiClient client = server.client();
    String response = "HTTP/1.1 200 OK\r\n";
    response += "Content-Type: multipart/x-mixed-replace; boundary=frame\r\n\r\n";
    server.sendContent(response);

    while (1)
    {
        cam.run();
        if (!client.connected())
            break;
        response = "--frame\r\n";
        response += "Content-Type: image/jpeg\r\n\r\n";
        server.sendContent(response);

        client.write((char *)cam.getfb(), cam.getSize());
        server.sendContent("\r\n");
        if (!client.connected())
            break;
    }
}

// Serve a single JPEG frame over HTTP.
void handle_jpg(void)
{
    WiFiClient client = server.client();

    cam.run();
    if (!client.connected())
    {
        return;
    }
    String response = "HTTP/1.1 200 OK\r\n";
    response += "Content-disposition: inline; filename=capture.jpg\r\n";
    response += "Content-type: image/jpeg\r\n\r\n";
    server.sendContent(response);
    client.write((char *)cam.getfb(), cam.getSize());
}

void handleNotFound()
{
    String message = "Server is running!\n\n";
    message += "URI: ";
    message += server.uri();
    message += "\nMethod: ";
    message += (server.method() == HTTP_GET) ? "GET" : "POST";
    message += "\nArguments: ";
    message += server.args();
    message += "\n";
    server.send(200, "text/plain", message);
}
#endif

#ifdef ENABLE_OLED
#define LCD_MESSAGE(msg) lcdMessage(msg)
#else
#define LCD_MESSAGE(msg)
#endif

#ifdef ENABLE_OLED
void lcdMessage(String msg)
{
    if(hasDisplay) {
        display.clear();
        display.drawString(128 / 2, 32 / 2, msg);
        display.display();
    }
}
#endif

CStreamer *streamer;

void setup()
{
#ifdef ENABLE_OLED
    hasDisplay = display.init();
    if(hasDisplay) {
        display.flipScreenVertically();
        display.setFont(ArialMT_Plain_16);
        display.setTextAlignment(TEXT_ALIGN_CENTER);
    }
#endif
    LCD_MESSAGE("booting");

    Serial.begin(115200);
    while (!Serial)
    {
        ;
    }
    cam.init(esp32cam_aithinker_config);

    IPAddress ip;

#ifdef SOFTAP_MODE
    const char *hostname = "devcam";
    // WiFi.hostname(hostname); // FIXME - find out why undefined
    LCD_MESSAGE("starting softAP");
    WiFi.mode(WIFI_AP);
    WiFi.softAPConfig(apIP, apIP, IPAddress(255, 255, 255, 0));
    bool result = WiFi.softAP(hostname, "12345678", 1, 0);
    if (!result)
    {
        Serial.println("AP Config failed.");
        return;
    }
    else
    {
        Serial.println("AP Config Success.");
        Serial.print("AP MAC: ");
        Serial.println(WiFi.softAPmacAddress());

        ip = WiFi.softAPIP();
    }
#else
    LCD_MESSAGE(String("join ") + ssid);
    WiFi.mode(WIFI_STA);
    WiFi.begin(ssid, password);
    while (WiFi.status() != WL_CONNECTED)
    {
        delay(500);
        Serial.print(F("."));
    }
    ip = WiFi.localIP();
    Serial.println(F("WiFi connected"));
    Serial.println("");
    Serial.println(ip);
#endif

    LCD_MESSAGE(ip.toString());

#ifdef ENABLE_WEBSERVER
    server.on("/", HTTP_GET, handle_jpg_stream);
    server.on("/jpg", HTTP_GET, handle_jpg);
    server.onNotFound(handleNotFound);
    server.begin();
#endif

#ifdef ENABLE_RTSPSERVER
    rtspServer.begin();

    //streamer = new SimStreamer(true);   // our streamer for UDP/TCP based RTP transport
    streamer = new OV2640Streamer(cam);   // our streamer for UDP/TCP based RTP transport
#endif
}

void loop()
{
#ifdef ENABLE_WEBSERVER
    server.handleClient();
#endif

#ifdef ENABLE_RTSPSERVER
    uint32_t msecPerFrame = 100;
    static uint32_t lastimage = millis();

    // If we have an active client connection, just service that until gone
    streamer->handleRequests(0); // we don't use a timeout here,
    // instead we send only if we have new enough frames
    uint32_t now = millis();
    if(streamer->anySessions()) {
        if(now > lastimage + msecPerFrame || now < lastimage) { // handle clock rollover
            streamer->streamImage(now);
            lastimage = now;

            // check if we are overrunning our max frame rate
            now = millis();
            if(now > lastimage + msecPerFrame) {
                printf("warning exceeding max frame rate of %d ms\n", now - lastimage);
            }
        }
    }

    WiFiClient rtspClient = rtspServer.accept();
    if(rtspClient) {
        Serial.print("client: ");
        Serial.print(rtspClient.remoteIP());
        Serial.println();
        streamer->addSession(rtspClient);
    }
#endif
}
```

Ai-Thinker already provides example code for RTSP streaming on the ESP32. The example uses preprocessor macros to separate the HTTP code from the RTSP code. Since we do not need HTTP-based video streaming, the unnecessary parts of the official code can be removed.

The ESP32 code below is derived from the official example and keeps only the RTSP streaming part:

```cpp
#include "OV2640.h"
#include <WiFi.h>
#include <WebServer.h>
#include <WiFiClient.h>

#include "SimStreamer.h"
#include "OV2640Streamer.h"
#include "CRtspSession.h"

// WiFi credentials, inlined here instead of including wifikeys_template.h
const char *ssid = "YAN";            // Put your SSID here
const char *password = "qwertyuiop"; // Put your PASSWORD here

#define ENABLE_RTSPSERVER

OV2640 cam;

WiFiServer rtspServer(8554);

CStreamer *streamer;

void setup()
{
    Serial.begin(115200);
    while (!Serial);
    cam.init(esp32cam_aithinker_config);

    IPAddress ip;

    // Join the WiFi network as a station and wait for the connection.
    WiFi.mode(WIFI_STA);
    WiFi.begin(ssid, password);
    while (WiFi.status() != WL_CONNECTED)
    {
        delay(500);
        Serial.print(F("."));
    }
    ip = WiFi.localIP();
    Serial.println(F("WiFi connected"));
    Serial.println("");
    Serial.println(ip); // this IP goes into the RTSP URL on the host side

    rtspServer.begin();

    //streamer = new SimStreamer(true);   // our streamer for UDP/TCP based RTP transport
    streamer = new OV2640Streamer(cam);   // our streamer for UDP/TCP based RTP transport
}

void loop()
{
    uint32_t msecPerFrame = 100;
    static uint32_t lastimage = millis();

    // If we have an active client connection, just service that until gone
    streamer->handleRequests(0); // we don't use a timeout here,
    // instead we send only if we have new enough frames
    uint32_t now = millis();
    if(streamer->anySessions()) {
        if(now > lastimage + msecPerFrame || now < lastimage) { // handle clock rollover
            streamer->streamImage(now);
            lastimage = now;

            // check if we are overrunning our max frame rate
            now = millis();
            if(now > lastimage + msecPerFrame) {
                printf("warning exceeding max frame rate of %d ms\n", now - lastimage);
            }
        }
    }

    // Accept a new RTSP client, if any, and register it as a session.
    WiFiClient rtspClient = rtspServer.accept();
    if(rtspClient) {
        Serial.print("client: ");
        Serial.print(rtspClient.remoteIP());
        Serial.println();
        streamer->addSession(rtspClient);
    }
}
```

The Arduino IDE serial monitor prints the initialization output, including the ESP32's IP address. This IP address must be written into the Python client code in the form of an RTSP URL:

```python
# RTSP URL
rtsp_url = "rtsp://192.168.168.238:8554/mjpeg/2"
```
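The address above has the shape `rtsp://<ip>:<port>/<path>`. As a minimal sketch, the helper below assembles it from the IP printed on the serial monitor. The function name `build_rtsp_url` is hypothetical, and the defaults (port 8554, path `mjpeg/2`) are simply the values used in this article, not something the library is known to require.

```python
import ipaddress


def build_rtsp_url(ip: str, port: int = 8554, path: str = "mjpeg/2") -> str:
    """Assemble an RTSP URL from an IPv4 address, port and stream path.

    Hypothetical helper for illustration; the defaults mirror this article.
    """
    # IPv4Address raises a ValueError subclass for malformed input,
    # which catches copy/paste mistakes from the serial monitor early.
    addr = ipaddress.IPv4Address(ip.strip())
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return f"rtsp://{addr}:{port}/{path.lstrip('/')}"


print(build_rtsp_url("192.168.168.238"))
# prints rtsp://192.168.168.238:8554/mjpeg/2
```

Validating the address before handing it to the video client makes a mistyped IP fail immediately with a clear error instead of a silent connection timeout.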

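opencv-python includes built-in support for pulling RTSP streams, so the opencv-python package needs to be installed. It can be installed quickly with pip from the PyCharm Terminal:

```
pip install opencv-python
```

Python 3 code for pulling the stream on the host PC:

```python
import sys

import cv2

# RTSP URL
rtsp_url = "rtsp://192.168.168.238:8554/mjpeg/2"

# Open the RTSP video stream
cap = cv2.VideoCapture(rtsp_url)

# Check that the stream was opened successfully
if not cap.isOpened():
    print("Failed to open RTSP stream")
    sys.exit(1)

# Read video frames in a loop
while True:
    # Read one frame
    ret, frame = cap.read()

    # Stop if the frame could not be read
    if not ret:
        break

    # Display the frame
    cv2.imshow("RTSP Stream", frame)

    # Press 'q' to leave the loop
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release resources
cap.release()
cv2.destroyAllWindows()
```

PS: Note that the host PC pulling the RTSP stream and the ESP32 pushing it must both be on the same local network for the stream to be transferred.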
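Because both devices must share a local network, it can help to confirm that the ESP32's RTSP port is reachable before opening the stream. The sketch below is one possible check using only the standard library; the name `rtsp_port_reachable` and the 2-second timeout are assumptions for illustration, and a successful TCP connection only shows that something is listening on the port, not that a valid stream is being served.

```python
import socket


def rtsp_port_reachable(host: str, port: int = 8554, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Hypothetical helper: it checks basic network reachability only.
    """
    try:
        # create_connection performs the TCP handshake; the "with" block
        # closes the socket again as soon as the check is done.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable networks.
        return False
```

For example, calling `rtsp_port_reachable("192.168.168.238")` before `cv2.VideoCapture(rtsp_url)` would separate a network problem (wrong IP, different LAN) from a problem in the streaming code itself.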
